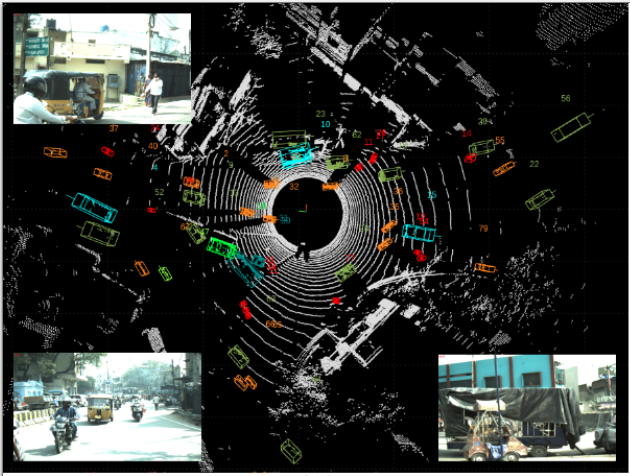

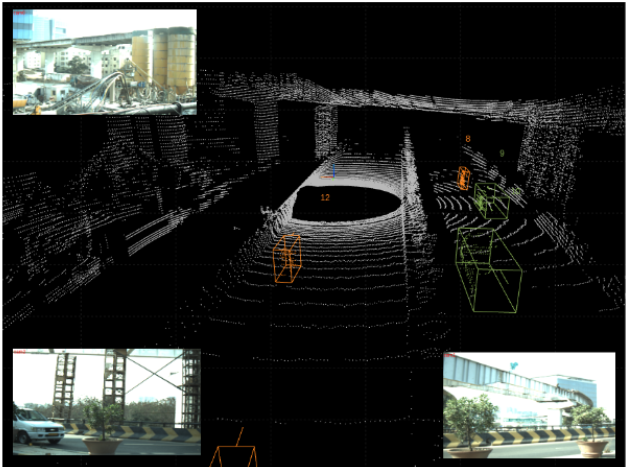

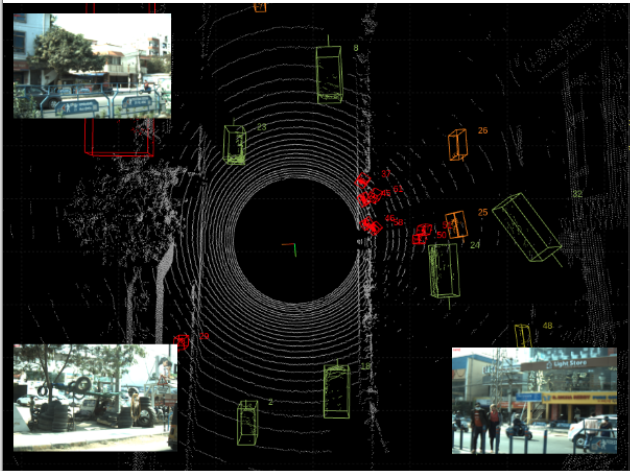

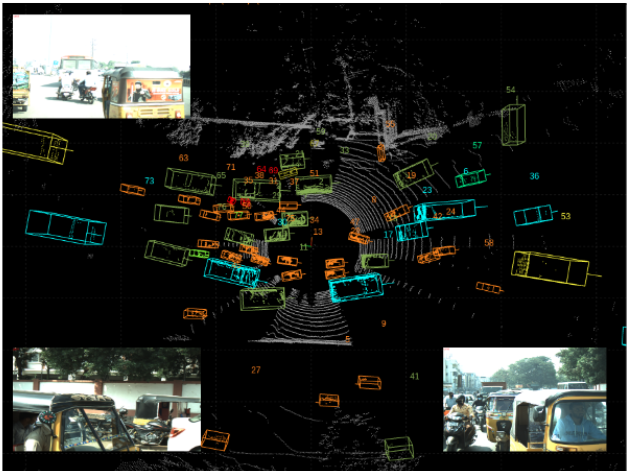

Some examples from the dataset showing different traffic scenarios, LiDAR data with annotations, and LiDAR point clouds projected onto camera images.

Abstract

Autonomous driving and assistance systems rely on annotated data from traffic and road scenarios to model and learn the various object relations in complex real-world scenarios. Preparing and training deployable deep learning architectures requires models that are suited to different traffic scenarios and can adapt to different situations. Existing datasets, while large-scale, lack such diversity and are geographically biased toward mainly developed cities. The unstructured and complex driving layouts found in several developing countries such as India pose a challenge to these models due to the sheer degree of variation in object types, densities, and locations. To facilitate better research toward accommodating such scenarios, we build a new dataset, IDD-3D, which consists of multi-modal data from multiple cameras and LiDAR sensors with 12k annotated driving LiDAR frames across various traffic scenarios. We discuss the need for this dataset through statistical comparisons with existing datasets and highlight benchmarks on standard 3D object detection and tracking tasks in complex layouts.

IDD-3D Summary

IDD-3D is a dataset designed to address the challenges of autonomous driving in unstructured environments. While existing datasets have primarily focused on well-organized, controlled settings, IDD-3D captures the complexities of real-world driving in diverse and chaotic conditions, such as those often encountered in Hyderabad, India.

Key Features:

- Diverse Geographical Coverage: IDD-3D is collected across various regions of Hyderabad, encompassing a range of road types, traffic densities, and environmental conditions.

- Rich Annotations: The dataset includes 3D bounding box annotations for approximately 223k objects across 17 categories. This enables a wide array of applications, from object detection to tracking and beyond.

- High-Quality Data: The dataset is meticulously curated, with high-resolution LiDAR and camera sensors capturing over 5 hours of driving data.

- Unique Object Categories: Unlike other datasets that often generalize objects into broad categories, IDD-3D provides a more nuanced classification, including unique vehicle types and pedestrian behaviors commonly seen in unstructured environments.

Some Interesting Cases

We highlight some interesting cases in the images below from the data collection and the traffic scenarios encountered and present in the dataset. The cases highlight different aspects of the dataset, the diversity available, and how the data can be used for different applications.

A case with different vehicle orientations and occlusions in the traffic scene. The heading directions of the vehicles are spread across multiple directions, rather than aligned within a narrow range as is typical of other datasets.
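One way to quantify how widely vehicle headings are spread in a frame is the circular variance of the annotated yaw angles. The sketch below is illustrative only and is not taken from the paper or the dataset tooling: it assumes each 3D box carries a yaw angle in radians, and the function names `circular_variance` and `heading_histogram` are hypothetical.

```python
import math
from typing import List, Sequence


def circular_variance(yaws: Sequence[float]) -> float:
    """Return 1 - R, where R is the mean resultant length of the headings.

    0 means all headings are identical; values near 1 mean the headings
    are spread out around the circle. Yaw angles are assumed to be in radians.
    """
    if not yaws:
        raise ValueError("need at least one heading")
    mean_cos = sum(math.cos(y) for y in yaws) / len(yaws)
    mean_sin = sum(math.sin(y) for y in yaws) / len(yaws)
    return 1.0 - math.hypot(mean_cos, mean_sin)


def heading_histogram(yaws: Sequence[float], num_bins: int = 8) -> List[int]:
    """Count headings per equal-width bin covering the full circle."""
    counts = [0] * num_bins
    width = 2.0 * math.pi / num_bins
    for y in yaws:
        wrapped = (y + math.pi) % (2.0 * math.pi)  # shift into [0, 2*pi)
        index = min(int(wrapped // width), num_bins - 1)  # guard the upper edge
        counts[index] += 1
    return counts


# Hypothetical frames: lane-aligned traffic vs. headings in all directions.
aligned_frame = [0.0, 0.05, -0.05, 0.02]
mixed_frame = [0.0, math.pi / 2, math.pi, -math.pi / 2]
print(circular_variance(aligned_frame), circular_variance(mixed_frame))
```

A frame with lane-aligned traffic gives a value close to 0, while a frame with headings in all directions gives a value close to 1. Note that this measure treats opposite directions as different, so two-way traffic on a straight road also scores as spread out.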

We highlight multiple levels of drivable surfaces, including roads, highways, and flyovers. The data collection vehicles were driven across different parts of the city to capture the diversity in road layouts and heights. It is important to note that the objects in the scene are annotated irrespective of their height as long as they are visible in the LiDAR data. This allows for a better understanding of the geometric layout of the traffic scenes.

This image shows an example of a safety-critical traffic scenario in which pedestrians cross the road in front of a vehicle. The dataset includes such scenarios, which are important for developing safety-critical systems. Analysis of objects in detection and tracking systems can lead to safer roads.

We highlight high-density traffic scenarios in the dataset, which contain a higher number of objects within given distance thresholds than other popular datasets, especially in unstructured scenarios. The value of the LiDAR sensor is especially clear in this scenario, where heavy traffic and vehicles in close proximity cause heavy occlusion in the camera FoV.
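Counting objects by distance threshold can be sketched as follows. This is a minimal illustration under stated assumptions, not the procedure used in the paper: box centers are assumed to be given as `(x, y, z)` in meters in the ego (LiDAR) frame, distance is measured in the ground plane, and the threshold values and the function name `objects_within_ranges` are hypothetical.

```python
import math
from typing import Dict, Iterable, Sequence, Tuple

Center = Tuple[float, float, float]  # (x, y, z) in meters, ego frame (assumed)


def objects_within_ranges(
    centers: Iterable[Center],
    thresholds_m: Sequence[float] = (10.0, 25.0, 50.0),  # illustrative values
) -> Dict[float, int]:
    """Count annotated objects whose ground-plane distance to the ego
    vehicle is at most each threshold (counts are cumulative)."""
    ranges = [math.hypot(x, y) for x, y, _z in centers]
    return {t: sum(1 for r in ranges if r <= t) for t in thresholds_m}


# Hypothetical frame with four annotated objects.
frame = [(3.0, 4.0, 0.0), (12.0, 5.0, 0.5), (30.0, 0.0, 0.0), (0.0, -60.0, 1.0)]
print(objects_within_ranges(frame))  # {10.0: 1, 25.0: 2, 50.0: 3}
```

Averaging these counts over all frames of a dataset gives a per-threshold density figure that can be compared across datasets, provided the same distance definition is used for each.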

Experimental Insights:

The paper presents a comprehensive analysis of the dataset using popular object detection methods like CenterPoint, SECOND, and PointPillars. The results validate the robustness and applicability of IDD-3D for developing more adaptive driving systems.

IDD-3D also includes a 3D object tracking evaluation, offering valuable metrics that can be used to understand object motion and behavior in complex scenarios.

Applications Beyond Autonomous Driving:

The dataset is not just limited to autonomous driving; its rich annotations and diverse scenarios make it applicable in other domains like road safety, traffic management, and surveillance.

Poster

BibTeX

@InProceedings{Dokania_2023_WACV,
  author    = {Dokania, Shubham and Hafez, A. H. Abdul and Subramanian, Anbumani and Chandraker, Manmohan and Jawahar, C. V.},
  title     = {IDD-3D: Indian Driving Dataset for 3D Unstructured Road Scenes},
  booktitle = {Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision (WACV)},
  month     = {January},
  year      = {2023},
  pages     = {4482-4491}
}